Transitions in distributed intelligence

By Olaf Witkowski

This post is a piece I recently wrote for my own blog. I was convinced to also post it here, since it is an exciting topic, close to YHouse’s central questions. Here is the link to the original post.

“Is there such a thing as being intelligent together?”

What is intelligence? How did it evolve? Is there such a thing as being "intelligent together"? How much does it help to speak to each other? Is there an intrinsic value to communication? Attempting to address these questions brings us back to the origins of intelligence.

Maynard Smith & Szathmáry's Major Transitions in Evolution (1995)

Intelligence back at the origins

Since the origin of life on our planet, the biosphere – a.k.a. the sum of all living matter on our planet – has undergone numerous evolutionary transitions (John Maynard Smith and Eörs Szathmáry, Oxford University Press, 1995). From the first chemical reaction networks, it has successively reached higher and higher stages of organization, from compartmentalized replicating molecules, to eukaryotic cells, multicellular organisms, colonies, and finally (but one can't assume it's nearly over) cultural societies.

“Through every transition, life drastically modifies the way information is stored, processed and transmitted.”

Transitions in... information processing

For at least 3.5 billion years, the biosphere has been modifying and recombining the living entities that compose it, to form higher layers of organization, and transferring bottom-layer features and functions to the larger scale. For example, the cells that now compose our body do not directly serve their own purpose, but rather work toward our life goals as humans. Through every transition in evolution, life has drastically modified the way it stores, processes and transmits information. This often led to new protocols of communication, such as DNA, cell heredity, epigenesis, or linguistic grammar, which will be the central focus later in this post.

Illustrated timeline of life on Earth, from its origins to the present day.

Every living system as a computer

The first messy networks of chemical reactions that managed to maintain themselves were already “computers”, in the sense that they were processing information inputs from the surrounding chemical environment, and affecting this environment in return. From that perspective, they already possessed a certain amount of intelligence. This may require a short parenthesis.

What do we mean by intelligence?

Octopodes show great dexterity and problem solving skills: they know how to turn certain difficult problems into easy ones. (Note that they also tend to hold Rubik's cubes in their favored arm, indicating that they are not "octidextrous".) Image credit: Bournemouth News.

Intelligence, in computational terms, is nothing else but the capacity to solve difficult problems with the minimal amount of energy. For example, any search problem can be solved by looking exhaustively at every possible place where a solution can hide. If, instead, a method allows us to look in just a few places before finding a solution, it should be called more intelligent than the exhaustive search. Of course, you could put more “searching agents” on the task, but the intelligence measure remains the same: the less time the search requires, divided by the number of agents employed, the more efficient the algorithm, and the more intelligent the whole physical mechanism.
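To make the search example concrete, here is a minimal sketch in Python. It assumes the "hiding places" are a sorted list of numbers, which is an illustrative choice rather than anything specific to living systems, and the function names are hypothetical. It counts how many places each method inspects before finding the target.

```python
from typing import Optional, Sequence, Tuple


def exhaustive_search(items: Sequence[int], target: int) -> Tuple[Optional[int], int]:
    """Look at every place in order; return (index, places_checked)."""
    checked = 0
    for index, value in enumerate(items):
        checked += 1
        if value == target:
            return index, checked
    return None, checked


def binary_search(items: Sequence[int], target: int) -> Tuple[Optional[int], int]:
    """Use the sorted order to halve the remaining range at each step."""
    low, high = 0, len(items) - 1
    checked = 0
    while low <= high:
        middle = (low + high) // 2
        checked += 1
        if items[middle] == target:
            return middle, checked
        if items[middle] < target:
            low = middle + 1
        else:
            high = middle - 1
    return None, checked


# Assumption for illustration: 1024 sorted hiding places.
hiding_places = list(range(1024))
target = 1000

print("exhaustive:", exhaustive_search(hiding_places, target))
print("binary:    ", binary_search(hiding_places, target))
```

Both methods find the same answer, but the second one exploits structure in the problem and inspects only a handful of places. In the sense used here, that is what makes it the more intelligent of the two.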

“Intelligence: turning a difficult problem into an easy one.”

This is not to say that intelligence is only one-dimensional. We are obviously ignoring very important parts of the story. This is all part of a larger topic which I intend to write about in more detail soon, but you could summarize it for now by saying that intelligence consists in "turning a difficult problem into an easy one".

Transitions in intelligence

Image credit: Trends in Ecology and Evolution

Let's now backtrack a little, to where we were discussing evolutionary transitions. We now have a picture in which the first chemical processes already possessed some computational intelligence, in the sense we just framed. Does this intelligence grow through each transition? Did the transitions make it easier to solve problems? Did they turn difficult problems into easy ones?

The main problem for life to solve is typically that of finding sources of free energy and converting them efficiently into work that helps the living entity preserve its own continued existence. If this is the case, then yes: the transitions seem to have made the problem easier. Each transition allowed living systems to climb steeper gradients. Each transition modified information storage, processing and transmission so as to ensure that the overall processing was beneficial to preserve life, in the short or longer term (an argument by Dawkins on the evolution of evolvability, which I'll also write more about in another post). And each transition turned the problem into an easier one for living systems.

Bloody learning

The evolution of distributed intelligence: the jump from the Darwinian paradigm to connectionist learning allowed for learning to evolve on much shorter timescales.

A few billion years ago, when life was still made of individual organisms, learning was achieved mostly by bloodshed. With Darwinian selection, the basic way for a species to incorporate useful information in its genetic pool was to have part of its population die. Very roughly, for the loss of half of its population, the species could get about one bit of information about the environment. It is obvious how inefficient this is, and this is of course still the case for all of life nowadays, from bacteria to fungi, and from plants to vertebrates. However, living organisms progressively learned to use different types of learning, based on communication. Instead of killing individuals in their populations, the processes started to "kill" useless information, and keep transferring the relevant pieces. One example of these new paradigms is connectionist learning: a set of interacting entities able to encode and update memories within a network. This permitted learning to evolve on much shorter timescales than replication cycles, which substantially boosted the ability of organisms to adapt to new ecological niches, recognize efficient behaviors, and predict environmental changes. This is, in a nutshell, how intelligence became distributed.
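One way to read the "one bit for half the population" figure (an interpretation, not something derived in this post) is the standard information-theoretic bound: narrowing a set of candidates down to a fraction s of its original size conveys at most log2(1/s) bits. The short sketch below computes that bound; the function name is hypothetical.

```python
import math


def bits_from_selection(surviving_fraction: float) -> float:
    """Upper bound, in bits, on what selection can convey when only
    `surviving_fraction` of the population survives (assumed model)."""
    if not 0.0 < surviving_fraction <= 1.0:
        raise ValueError("surviving_fraction must be in (0, 1]")
    return -math.log2(surviving_fraction)


# Each halving of the population buys at most one more bit.
for fraction in (0.5, 0.25, 0.125):
    print(f"{fraction:.3f} surviving -> {bits_from_selection(fraction):.1f} bit(s)")
```

Under this reading, the cost of learning by selection grows quickly: every additional bit requires losing half of whatever population remains.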

Distributed intelligence

The general intuition is that you can always accomplish more with two brains than with just one. In an ideal world, you could divide the computation time by two. One condition, though, is that those two brains should be connected, and able to exchange information. The way to achieve that is through the establishment of some form of language to allow for concepts to be replicated from one mind to another, which can range from the use of basic signals to complex communication protocols.

Another intuition is that, in a society of specialists, all knowledge (information storage), thinking (information processing) and communication (information transmission) is distributed over individuals. To be able to extract the right piece of knowledge and apply it to the problem at hand, one should be able to query any piece of information, and have it transferred from one place to another in the network. This is essentially another way to formulate the communication problem. Given the right communication protocol, information transfers can significantly improve the power of computation. Recent advances suggest that by allowing concepts to reorganize while they are being sent back and forth from mind to mind, one can drastically improve the complexity of problem-solving algorithms.

Raison d’Être of a Highly Connected Society

In the highly connected network of human society, communication has the hidden potential to improve lives on a global scale. Image credit: Milvanuevatec

There is a reason why, as a scientist, I am constantly interacting with my colleagues. First, I have to point out that it doesn't have to be the case. Scientists could be working alone, locked in individual offices. Why bother talking to each other, after all? Anyone with an internet connection already has access to all the information needed to conduct research. Wouldn’t isolating yourself all the time increase your focus and productivity?

As a matter of fact, almost no field of research really does that. Apart from very few exceptions, everyone seems to find a huge intrinsic value in exchanging ideas with their peers. The reason for that may be that through repeated transfers from mind to mind, concepts seem to converge towards new ideas, theorems, and scientific theories.

“Through repeated transfers from mind to mind, concepts seem to converge towards new ideas, theorems, and scientific theories.”

That is not to say that no process needs to be isolated for a certain time. It might be helpful to isolate and take time to reflect for a while, just the way I am doing myself writing this post. But ultimately, to maximize its usefulness, information needs to be passed on, and spread to relevant nodes in the network. Waiting for your piece of work to be completely perfect before sharing it back to society may seem tempting, but there is value in sharing it early. For those who would be interested in reading more about this, I have ongoing research which should get published soon, examining the space of networks in which communication helps achieve optimal results, under a certain set of conditions.

Evolvable Communication

For communication to help in this way, one intriguing requirement appears to be that it is sufficiently "evolvable", which some early results from my own work support. The best communication systems not only serve as good information maps onto useful concepts (knowledge in mathematics, physics, etc.) but they are also shaped so as to be able to naturally evolve into even better maps in the future. One should note that these results, although very exciting, are preliminary, and will need further formal computational proof. But if confirmed, this may have very significant implications for the future of communication systems, for example in artificial intelligence (AI – I don't know how useful it is to spell that one out nowadays).

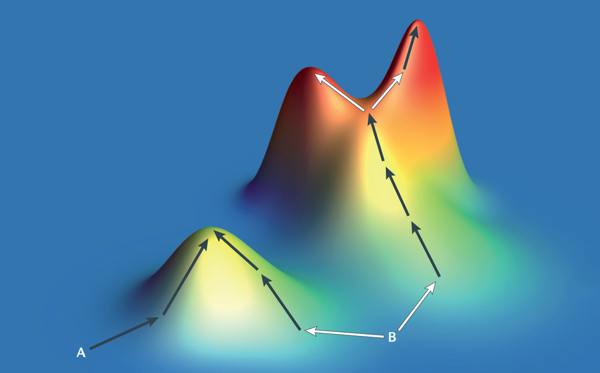

Illustration of fitness landscape gradient descent. The communication code B can evolve into two optima hills, but at each bifurcation lies a choice which should be pondered with the maximum of information.

To give you an idea, evolvable-communication-based AI would have the potential to generalize representations through social learning. This means that such an AI could have different parts of itself talk to each other, in turn becoming wiser through this process of "self-reflection". Pushing it just a bit further, this same paradigm may also lead to many more results, such as a new theory of the evolution of language, insights for the planning of future communication technology, a novel characterization of evolvable information transfers in the origin of life, and even new insights for a hypothetical communication system with extraterrestrial intelligence.

Evolvable communication is definitely a topic that I'll be developing more in my next posts (I hate to be teasing again, but adding full details would make this post too lengthy). Stay tuned for more, and in the meantime, I'd be happy to answer any question in the comments.

The problem of dense sphere packing in multiple dimensions is closely related to finding optimal communication codes. To be continued in the next post!

Up next: hyperconnected AIs, language and sphere-packing

In my next post, I tackle the problem of finding optimal communication protocols, in a society where AI has become omnipresent. I will show how predicting future technology requires accurate analysis from machine learning, sphere-packing, and formal language and coding theories.

Olaf Witkowski is the Research Architect at YHouse. He is also a Research Scientist at the Earth-Life Science Institute (ELSI), part of the Tokyo Institute of Technology, and a Visiting Member at the Institute for Advanced Study (IAS) in Princeton. He was a Program Chair for the ALIFE 2018 conference, and leads multiple projects in AI and Artificial Life. His blog lives here.